Fall Damage Blog

Performance optimizing a small build system for Unity

We run a small Jenkins installation in Google Cloud. Whenever there are new changes in our source repository, it will grab the latest changes, run tests, build, report back failure/success to people on the team, and make the new game build available if successful.

Our build system worked reasonably well, but I was curious: How cost effective is it? How much can we increase its performance by throwing $$$ at the problem? Are there any architectural changes we could make to speed it up?

Summary

Google Cloud works well for running build machines for Unity projects.

Two VMs, each with 2 vCPUs, 9GB RAM, and 200GB of pd-ssd storage, are enough to provide our 14-strong development team with all the builds they need.

We would get more concurrent builds by adding VMs. If we want an individual build to go faster, however, then we would need to move to a local build server.

Each build machine in Google Cloud costs about $150/month, plus $50-100/month for the Jenkins master.
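As a quick sanity check on the numbers above, here is a minimal sketch of the monthly bill for a given number of build machines. The function name and defaults are made up for illustration; only the $150 and $50-100 figures come from our setup.

```python
def monthly_cost_range(build_machines, agent_cost=150.0, master_cost_range=(50.0, 100.0)):
    """Return the (low, high) monthly cost in USD for a Jenkins setup.

    agent_cost is the approximate cost per build machine, and
    master_cost_range is the approximate cost of the Jenkins master,
    both taken from the rough figures quoted in this post.
    """
    agents_total = build_machines * agent_cost
    low = agents_total + master_cost_range[0]
    high = agents_total + master_cost_range[1]
    return (low, high)


if __name__ == "__main__":
    low, high = monthly_cost_range(2)
    print(f"Two build machines: ${low:.0f}-{high:.0f} per month")
```

With our two build machines this lands at roughly $350-400 per month.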

Key metrics

There are two processes which we want to make as quick as possible:

- If someone makes a bad change, that person should find out as early as possible.

- If someone makes a good change, there should be a game build available for play testing as soon as possible.

We have the former at about 10 minutes and the latter at about 20 minutes for our current project. This works well for our team; the developers are rarely waiting on build system results. Our project will continue to grow, however, and those timings will increase with it.

Initial hardware/software setup

We have one tiny Linux VM which runs the Jenkins server, and one Windows VM which runs a Jenkins agent. Here is the typical output from a couple of build runs:

Testing, testing…

One nice thing about running the build system on cloud hardware is that you can rent machinery for a couple of hours, and only pay for those hours.

I created a set of Windows PowerShell scripts which rent a VM and configure it as a Jenkins build agent, ready to build Unity projects, and made up a number of test scenarios. Two days later, I had some test data ready for analysis:

I took some captures of resource usage for some configurations as well.

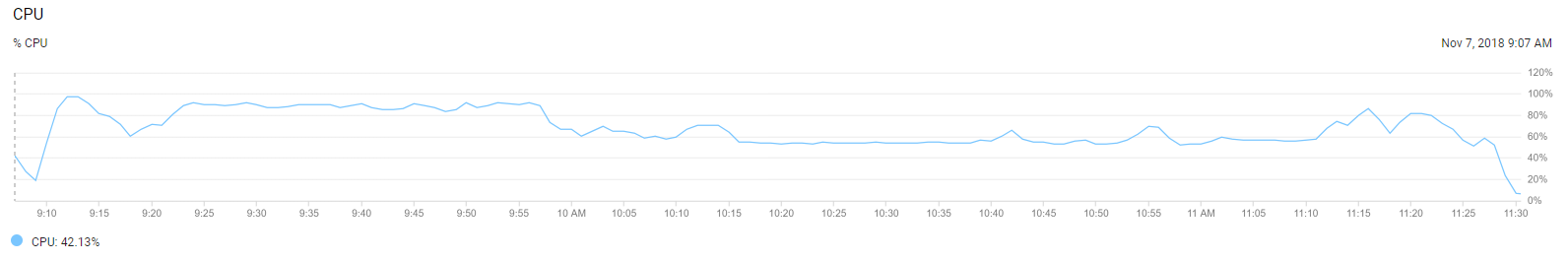

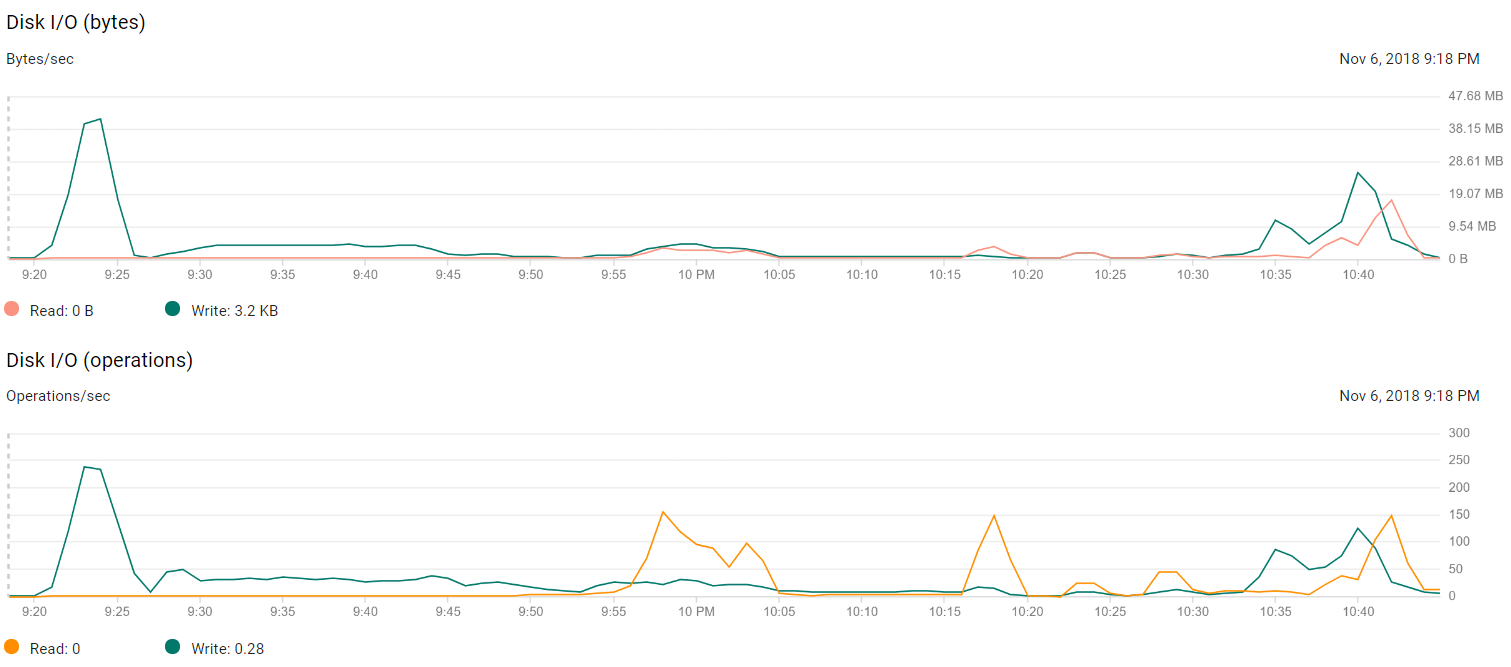

2 vCPUs, full build:

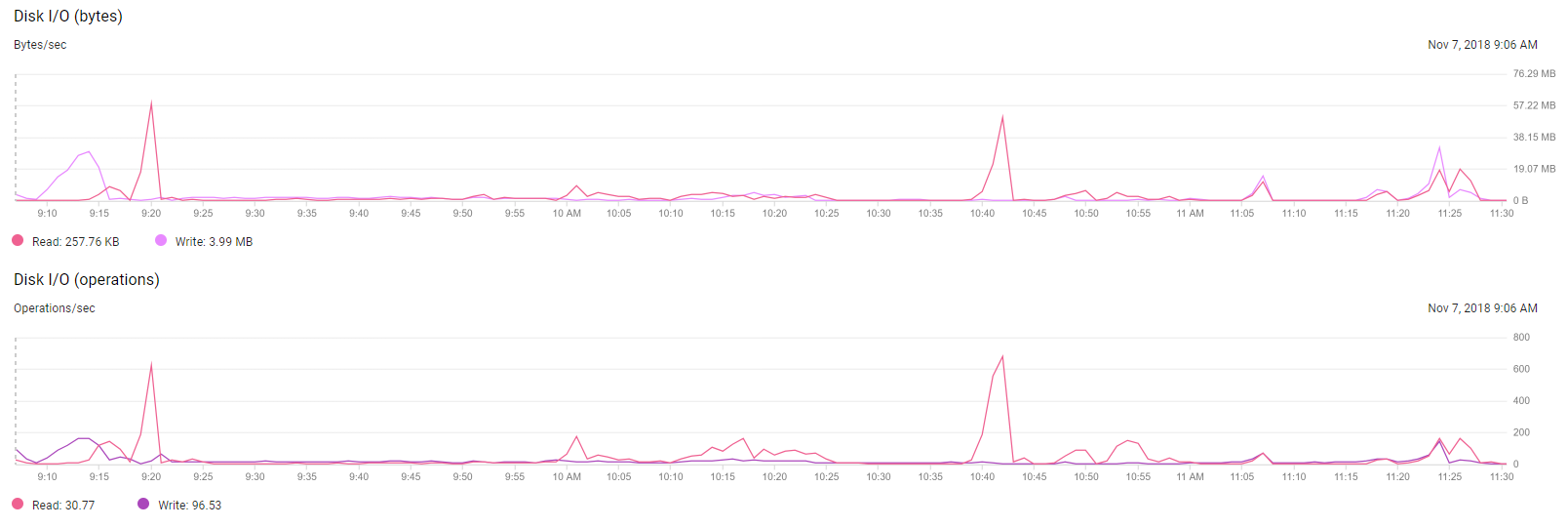

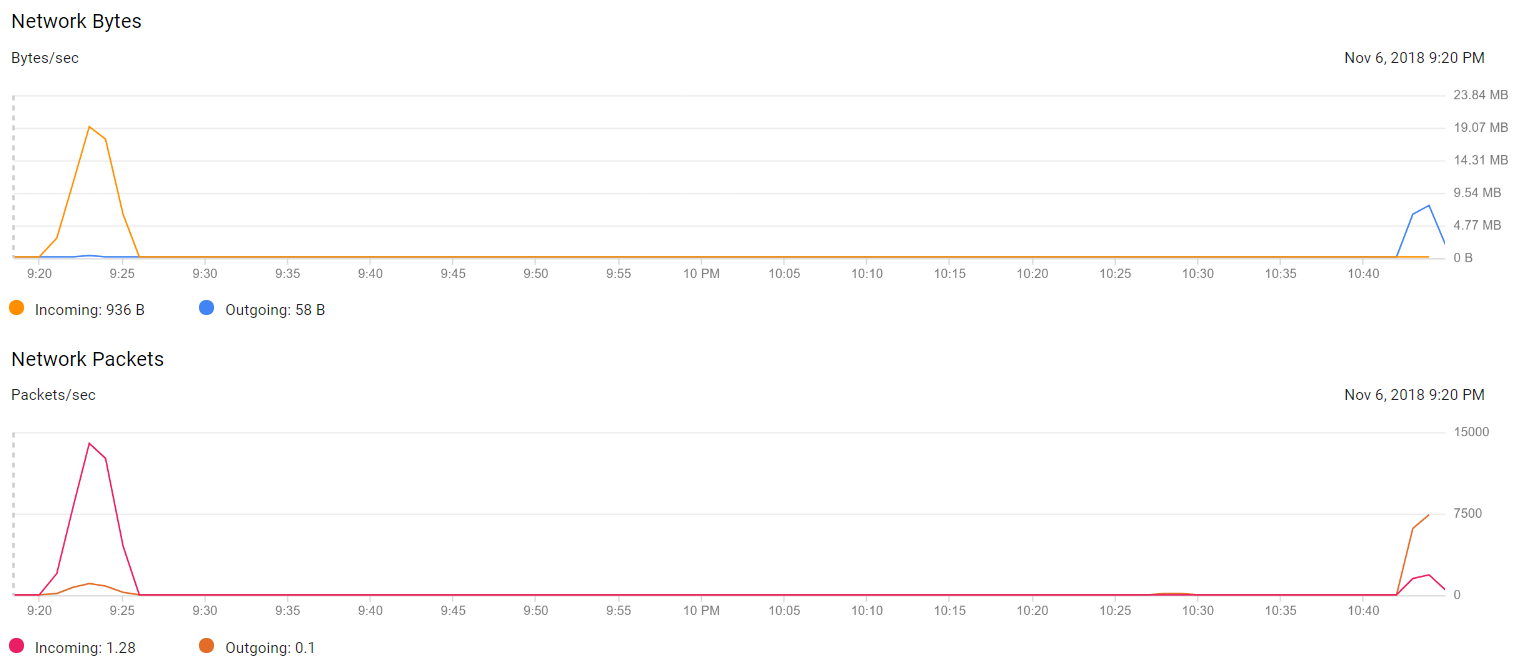

8 vCPUs, full build:

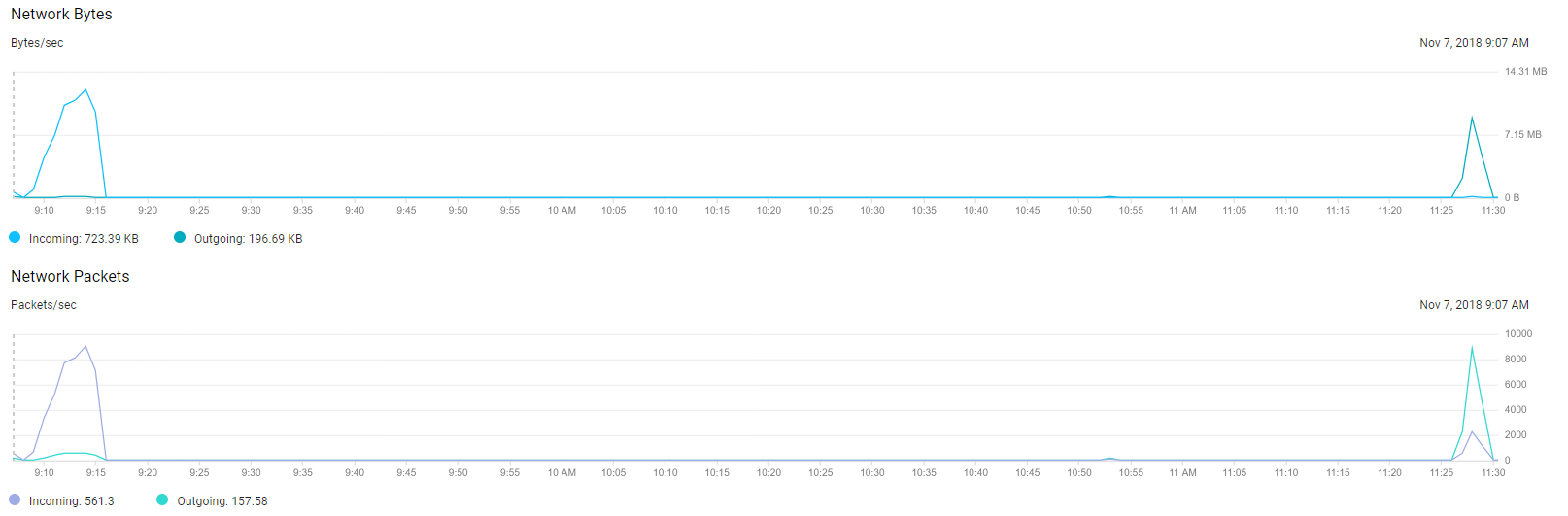

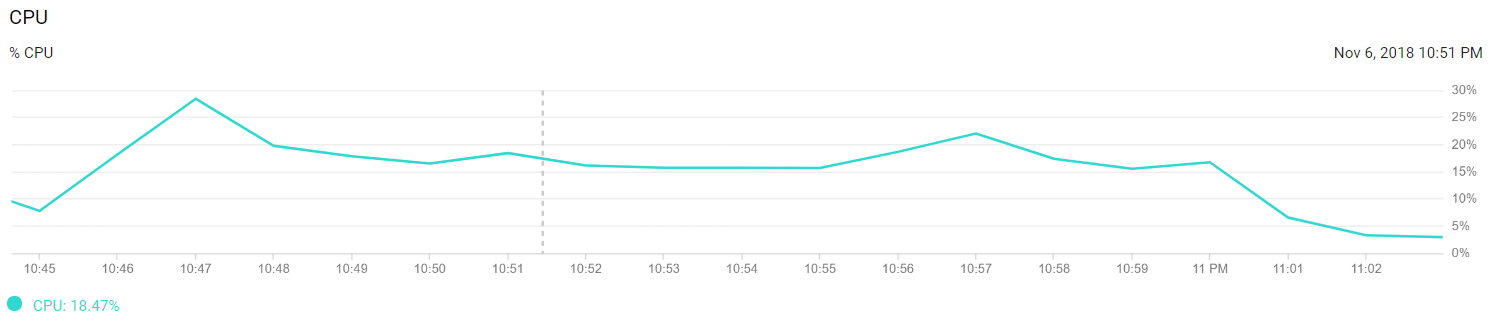

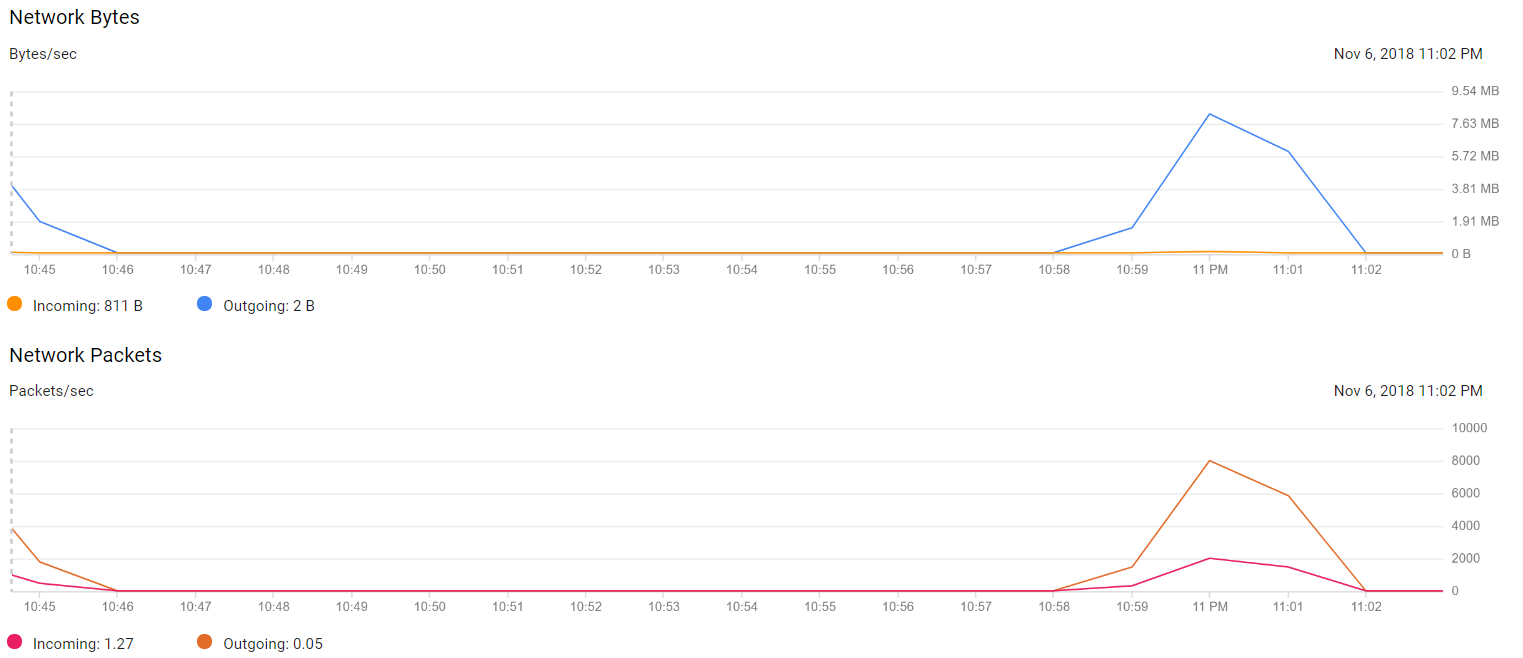

8 vCPUs, incremental build:

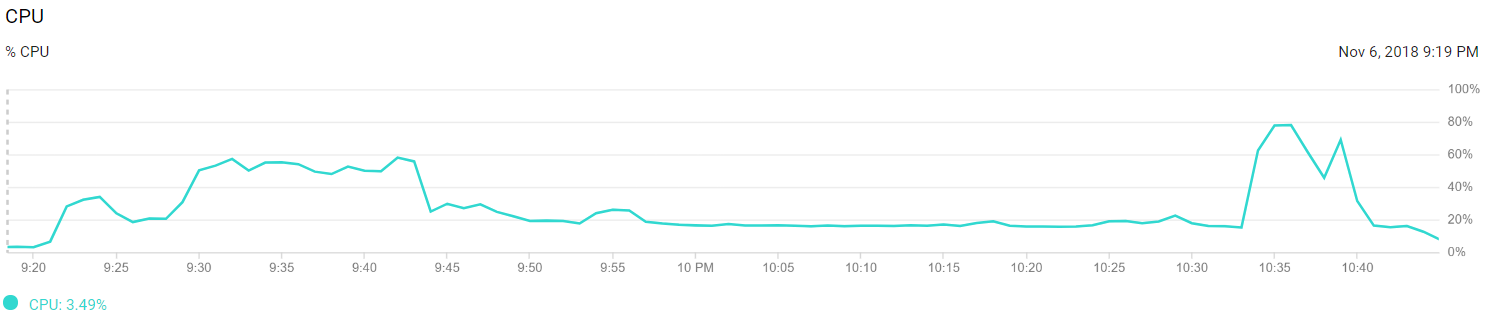

The first thing that stands out from these graphs is that Unity’s build process is not taking much advantage of multiple cores. The spikes in CPU / disk / network at the beginning and end of a full build are the repository checkout, zipping, and Google Drive uploads.

Asset import seems to be capable of using on average 4 vCPUs, but the remainder of a full build – and nearly all of an incremental build – is using on average 2 vCPUs.

Disk access isn’t particularly high volume either, whether you look at throughput or IOPS.

The above indicates that many aspects of asset import / test runner / player building are to a large extent serial processes. This is not surprising; making a build process like Unity’s properly multi-threaded and overlapping I/O with CPU work is a huge effort, and it is usually not what customers put at the top of their wish lists.

Takeaways

Have one machine for continuous builds, and another for scheduled builds

We have 8-or-so build jobs; one is “latest version of mainline”, the rest are various Steam configurations, profiling versions, etc. Initially all build jobs were on the same build agent. This made turnaround times unreliable: whenever a scheduled build job kicked off, it would result in delayed feedback about build breaks to the team.

Releases became stressful, because then the scheduled build jobs would need to be triggered more often than usual, making the problem even worse.

Moving to two build machines resolved this: machine A keeps the team informed about build status, and machine B creates all the release builds etc.

Make sure to do incremental builds

Regardless of the machine, an incremental build is 50-90% faster than a full build. You should do incremental builds by default. (If you need to do clean builds, run them at night.)

Nearly all major steps – checking out the repository, importing content, and building a player – benefit from incremental builds.

It would be possible to speed up full builds by using a Cache Server… but it makes the build system more complex, and it will not make a significant difference for incremental builds.
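In practice, "incremental" mostly means that the build agent keeps its workspace between runs, including Unity’s Library folder, which holds the imported asset data. Below is a minimal sketch of how a build step could make that choice explicit. The helper names, paths, and the `BuildScript.BuildPlayer` method are hypothetical and not taken from our actual scripts; the command-line flags are standard Unity editor arguments.

```python
import shutil
from pathlib import Path


def prepare_workspace(project_path, clean):
    """Delete Unity's Library folder for a clean build; keep it otherwise.

    Keeping the folder lets Unity reuse previously imported assets,
    which is what makes the next build incremental.
    """
    library = Path(project_path) / "Library"
    if clean and library.exists():
        shutil.rmtree(library)


def unity_build_command(unity_exe, project_path, build_method, log_file):
    """Assemble a headless Unity invocation as an argument list.

    build_method is a static C# method in the project's Editor code
    (hypothetical here) that performs the actual player build.
    """
    return [
        str(unity_exe),
        "-batchmode",            # run without the interactive editor UI
        "-quit",                 # exit once the method has finished
        "-projectPath", str(project_path),
        "-executeMethod", build_method,
        "-logFile", str(log_file),
    ]


if __name__ == "__main__":
    # Hypothetical paths; adjust to the build agent's layout.
    prepare_workspace("C:/jenkins/workspace/game", clean=False)
    print(unity_build_command("Unity.exe", "C:/jenkins/workspace/game",
                              "BuildScript.BuildPlayer", "build.log"))
```

The resulting list can be handed to `subprocess.run`. A nightly clean build would call `prepare_workspace` with `clean=True` and leave everything else unchanged.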

More vCPUs help, but only a bit

Going from 2 to 16 vCPUs reduces incremental build time by only 25%, because most of Unity’s build processing does not benefit from the extra CPU cores. Machine cost, on the other hand, goes up by 300%.

We decided to continue using 2-vCPU machines.
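One way to read the 25% figure is through Amdahl’s law. The sketch below assumes the build splits cleanly into a serial part and a perfectly parallel part, which is a simplification and not something we measured directly. Under that assumption it solves for the serial fraction implied by the 2-to-16 vCPU result and uses it to estimate other core counts.

```python
def relative_time(serial_fraction, vcpus):
    """Amdahl's law: build time relative to a single-vCPU build."""
    return serial_fraction + (1.0 - serial_fraction) / vcpus


def implied_serial_fraction(vcpus_small, vcpus_large, time_ratio):
    """Solve Amdahl's law for the serial fraction s, given that
    relative_time(s, vcpus_large) / relative_time(s, vcpus_small) == time_ratio.
    """
    a, b, r = vcpus_small, vcpus_large, time_ratio
    return (r / a - 1.0 / b) / ((1.0 - 1.0 / b) - r * (1.0 - 1.0 / a))


if __name__ == "__main__":
    # A 25% reduction means the 16-vCPU build takes 0.75x the 2-vCPU time.
    s = implied_serial_fraction(2, 16, 0.75)
    print(f"implied serial fraction: {s:.2f}")
    for n in (4, 8, 16, 64):
        ratio = relative_time(s, n) / relative_time(s, 2)
        print(f"{n:>2} vCPUs: {ratio:.2f}x the 2-vCPU build time")
```

Under this model a bit more than half of the single-core build time is serial, and even an unlimited number of cores would cut the 2-vCPU build time by less than 30%. That is consistent with the resource graphs above.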

More memory does not help

Having too little memory will result in Unity crashing. (This happens for us with a 7GB RAM machine.) Once Unity can build safely, adding more memory makes no difference.

We decided to stay with 9GB RAM, but will add more once we start seeing out-of-memory related errors.

pd-ssd is the sweet spot for storage

Google Cloud offers three types of disks: pd-standard (non-SSD disks located somewhere on their network), pd-ssd (SSDs located somewhere on the network), and local-ssd (SSDs physically attached to the machine).

Going from pd-standard to pd-ssd offers a healthy 25% speedup, at a 20% cost increase.

Going from pd-ssd to local-ssd offers another 10% speedup at no cost increase — but the operational procedures are very different. For example, a local-ssd machine cannot be shut down and restarted later.

We decided to continue using pd-ssd for storage.

Run only one build job at a time on a machine

I had initially hoped that it would be possible to run multiple build jobs in parallel on a larger machine, and thereby amortize the cost, while getting better peak throughput. That was a no go:

- Two concurrent builds using the same Unity version resulted in crashes in Chromium Embedded Framework (which is linked into Unity).

- Even if it worked, in the worst case the machine would need 2x9GB of memory anyway when running two build processes at the same time (or else one process would crash when running out of memory).

Even quicker builds require a hand-assembled build machine

There is a significant performance difference – say, 2x – between building on a high-end workstation and building on a VM in Google Cloud. The only way to get significantly quicker builds than in the above test is by moving to hardware that isn’t offered by Google Cloud: machines with few CPU cores and high GHz ratings, no other programs competing for L2/L3 cache and disk bandwidth, GPUs for certain types of content import, and builds transferred to users via a SAN at the office instead of over the internet.

If this is important to you, perhaps it is time to set up an on-premises build cluster? We do not like dealing with hardware, and will stick to Google Cloud as long as possible.